Running Node.js tests in parallel using GitHub Actions

In several UI repositories I have worked on that use GitHub Actions, CI runs took a long time, occasionally up to 20 minutes in total. A significant portion of that time was spent running the Node.js E2E and unit tests, each of which could take up to 17 minutes on its own.

I therefore adapted the CI setup for the unit and E2E tests, based on the great strategies Cully Larson outlined in their article. Their main idea was to split the workflow into multiple jobs, divide the tests so they can run in parallel, and set up a job matrix to run the Cypress E2E tests concurrently. I highly recommend reading the referenced article BEFORE this one.

The main contributions are:

- making the original scripts (cypress-spec-split.ts and cypress-ci-run.ts) work for test types beyond Cypress;
- letting developers easily configure the number of parallel copies of each test job in the CI workflow.

Splitting the tests based on a given spec pattern

In my case, I needed to split any type of test file, not just Cypress specs. So, as a first step, I modified the spec-split script so that it splits the tests based on a spec file pattern passed as an argument.

For example, for the unit tests I used the command `node spec-split.js 3 1 'src/**/*.test.ts'`, and for the E2E tests `node spec-split.js 3 1 'cypress/e2e/**/*.cy.{ts,js}'`. In both cases, the first argument (`3`) is the total number of jobs. The second argument (`1`) is the index of the current job, starting from 0, and it ensures that each job gets its own unique set of test files. The third argument is the test file pattern. It is quoted so that the shell passes it to the script unexpanded.
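The core of the split is small enough to try in isolation. What follows is a minimal TypeScript sketch of that idea, not the script itself. The file names and test counts are made up; in the script, the counts come from counting `it(`/`test(` calls in each file. The sketch sorts the files by descending test count and deals them out round-robin, so runner `thisRunner` receives every file whose index satisfies `index % totalRunners === thisRunner`.

```typescript
// Hypothetical test counts per spec file (illustrative only).
const testsPerSpec: Record<string, number> = {
  'src/a.test.ts': 12,
  'src/b.test.ts': 3,
  'src/c.test.ts': 8,
  'src/d.test.ts': 5,
  'src/e.test.ts': 1,
}

// Sort spec paths so that the files with the most tests come first.
function sortByTestCount(counts: Record<string, number>): string[] {
  return Object.entries(counts)
    .sort((a, b) => b[1] - a[1])
    .map(([path]) => path)
}

// Round-robin: runner `thisRunner` (0-based) takes indices thisRunner,
// thisRunner + totalRunners, thisRunner + 2 * totalRunners, ...
function splitSpecs(specs: string[], totalRunners: number, thisRunner: number): string[] {
  return specs.filter((_, index) => index % totalRunners === thisRunner)
}

const sorted = sortByTestCount(testsPerSpec)
for (let runner = 0; runner < 3; runner++) {
  console.log(runner, splitSpecs(sorted, 3, runner).join(' '))
}
// → 0 src/a.test.ts src/b.test.ts
// → 1 src/c.test.ts src/e.test.ts
// → 2 src/d.test.ts
```

With these numbers the runners get 15, 9 and 5 tests. Sorting before dealing keeps the heaviest files apart, but the result is only roughly balanced.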

```js
// scripts/spec-split.js
// Usage: node scripts/spec-split.js <totalRunners> <thisRunner> '<glob pattern>'
// Assumes CommonJS-compatible versions of globby (v11) and minimatch (v5 or
// earlier), whose require() returns the function directly.
const fs = require('fs/promises')
const globby = require('globby')
const minimatch = require('minimatch')

const specPatterns = {
  excludeSpecPattern: ['tsconfig.json']
}

// Matches it( and test( calls; used as a rough measure of a file's size
const testPattern = /(^|\s)(it|test)\(/g

// Only run the main block when the file is executed directly
const isCli = require.main?.filename === __filename

function getArgs () {
  // slice (not splice) reads the CLI arguments without mutating process.argv
  const [totalRunnersStr, thisRunnerStr, pattern] = process.argv.slice(2)

  if (!totalRunnersStr || !thisRunnerStr || !pattern) {
    throw new Error('Missing arguments')
  }

  const totalRunners = Number(totalRunnersStr)
  const thisRunner = Number(thisRunnerStr)

  if (!Number.isInteger(totalRunners) || totalRunners < 1) {
    throw new Error('Invalid total runners.')
  }

  // thisRunner is a 0-based index, so it must be lower than totalRunners
  if (!Number.isInteger(thisRunner) || thisRunner < 0 || thisRunner >= totalRunners) {
    throw new Error('Invalid runner.')
  }

  return { totalRunners, thisRunner, pattern }
}

async function getTestCount (filePath) {
  const content = await fs.readFile(filePath, 'utf8')
  return content.match(testPattern)?.length || 0
}

async function getSpecFilePaths (pattern) {
  const options = { ...specPatterns, specPattern: pattern }
  const files = await globby(options.specPattern, {
    ignore: options.excludeSpecPattern
  })

  // Safety net: drop any file that still matches an ignore pattern
  const ignorePatterns = [...(options.excludeSpecPattern || [])]
  const doesNotMatchAllIgnoredPatterns = (file) => {
    const MINIMATCH_OPTIONS = { dot: true, matchBase: true }
    return ignorePatterns.every((pattern) => !minimatch(file, pattern, MINIMATCH_OPTIONS))
  }

  return files.filter(doesNotMatchAllIgnoredPatterns)
}

// Sorts spec files by test count, largest first, so that the round-robin
// split below spreads the heaviest files across the runners
async function sortSpecFilesByTestCount (specPathsOriginal) {
  const specPaths = [...specPathsOriginal]
  const testPerSpec = {}

  for (const specPath of specPaths) {
    testPerSpec[specPath] = await getTestCount(specPath)
  }

  return Object.entries(testPerSpec)
    .sort((a, b) => b[1] - a[1])
    .map((x) => x[0])
}

// Round-robin: runner N takes files N, N + totalRunners, N + 2 * totalRunners, ...
function splitSpecs (specs, totalRunners, thisRunner) {
  return specs.filter((_, index) => index % totalRunners === thisRunner)
}

(async () => {
  if (!isCli) return

  try {
    const { totalRunners, thisRunner, pattern } = getArgs()
    const specFilePaths = await sortSpecFilesByTestCount(await getSpecFilePaths(pattern))

    if (!specFilePaths.length) {
      throw Error('No spec files found.')
    }

    const specsToRun = splitSpecs(specFilePaths, totalRunners, thisRunner)
    // Space-separated list, appended to the test command by ci-run.ts
    console.log(specsToRun.join(' '))
  } catch (err) {
    console.error(err)
    process.exit(1)
  }
})()
```

The next step was to make the script that runs the tests in CI execute any test command with a given test file pattern. The script now:

- reads the total number of jobs (`totalRunners`, from `TOTAL_RUNNERS`), the index of this job (`thisRunner`, from `THIS_RUNNER`), the test file pattern (`pattern`, from `TEST_FILES_PATTERN`) and the test command (`testCommand`, from `TEST_COMMAND`) from environment variables;
- calls spec-split.js to get this job's share of the test files;
- executes `testCommand` with those files appended.

```ts
// scripts/ci-run.ts
import { execSync, spawn } from 'child_process'

interface GetEnvOptions {
  required?: boolean
}

function getEnvNumber (varName: string, { required = false }: GetEnvOptions = {}): number {
  if (required && process.env[varName] === undefined) {
    throw Error(`${varName} is not set.`)
  }

  const value = Number(process.env[varName])

  if (isNaN(value)) {
    throw Error(`${varName} is not a number.`)
  }

  return value
}

function getEnvString (varName: string, { required = false }: GetEnvOptions = {}): string {
  const value = process.env[varName]

  if (required && value === undefined) {
    throw Error(`${varName} is not set.`)
  }

  // Fall back to '' so the declared return type also holds in strict mode
  return value ?? ''
}

function getArgs () {
  return {
    totalRunners: getEnvNumber('TOTAL_RUNNERS', { required: true }),
    thisRunner: getEnvNumber('THIS_RUNNER', { required: true }),
    pattern: getEnvString('TEST_FILES_PATTERN', { required: true }),
    testCommand: getEnvString('TEST_COMMAND', { required: true })
  }
}

(async () => {
  try {
    const { totalRunners, thisRunner, pattern, testCommand } = getArgs()

    // Quote the pattern so /bin/sh passes the glob to spec-split.js verbatim
    // instead of expanding it (assumes the pattern has no single quotes)
    const splitCommand = `node scripts/spec-split.js ${totalRunners} ${thisRunner} '${pattern}'`
    const filePaths = execSync(splitCommand).toString().trim()

    if (!filePaths) {
      // More runners than spec files: nothing left for this runner
      console.log('No spec files assigned to this runner.')
      process.exit(0)
    }

    const command = `${testCommand} ${filePaths}`
    console.log(`Running: ${command}`)

    // spawn with stdio 'inherit' streams the output directly; exec would
    // buffer it and kill the child once its maxBuffer is exceeded
    const commandProcess = spawn(command, { shell: true, stdio: 'inherit' })

    // A null exit code means the child was killed by a signal: treat as failure
    commandProcess.on('exit', (code) => {
      process.exit(code ?? 1)
    })
  } catch (err) {
    console.error(err)
    process.exit(1)
  }
})()
```

Both scripts live in the scripts/ directory at the root of the project: scripts/spec-split.js and scripts/ci-run.ts.
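Because ci-run.ts hands the split command to a shell, an unquoted pattern such as `src/**/*.test.ts` can be expanded by the shell before spec-split.js ever sees it. In that case the script receives the first matching file as its `pattern` argument instead of the glob.

Below is a minimal sketch of POSIX single-quote escaping. It assumes a POSIX `sh`, as on `ubuntu-latest` runners. `quoteForShell` is a hypothetical helper, not part of either script.

```typescript
// Wrap a value in single quotes for a POSIX shell. Inside single quotes
// nothing is expanded, so globs like ** and {ts,js} reach the program
// verbatim. An embedded single quote is closed, escaped, and reopened.
function quoteForShell(value: string): string {
  return `'${value.replace(/'/g, `'\\''`)}'`
}

const pattern = 'cypress/e2e/**/*.cy.{ts,tsx,js,jsx}'
const splitCommand = `node scripts/spec-split.js 3 1 ${quoteForShell(pattern)}`
console.log(splitCommand)
// → node scripts/spec-split.js 3 1 'cypress/e2e/**/*.cy.{ts,tsx,js,jsx}'
```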

CI workflow using GitHub Actions

In the CI workflows affected by long durations, the unit tests and E2E tests run in separate jobs. I kept the ability to run multiple copies of each job by using the matrix strategy from the original article.

In each job's env section, I added two environment variables besides TOTAL_RUNNERS (the number of parallel copies of the job) and THIS_RUNNER (the index of the current copy):

- TEST_COMMAND: the test command to execute. For the E2E tests, it is set to `cypress run --spec`, the command for running Cypress tests.
- TEST_FILES_PATTERN: the pattern that identifies the test files to split and execute. For the E2E tests, it is set to `cypress/e2e/**/*.cy.{ts,tsx,js,jsx}`, which selects the relevant Cypress spec files.

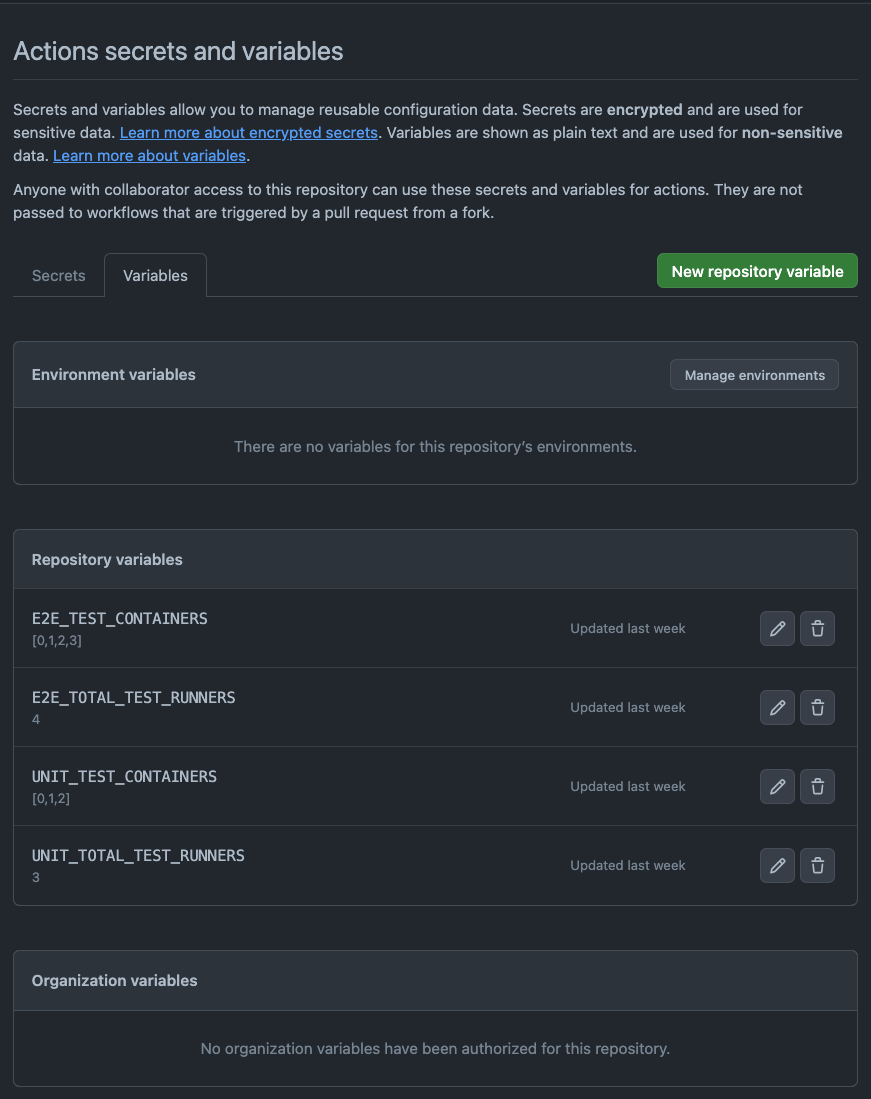

To make the number of job copies and the matrix containers easy to configure, I created repository-level Actions variables. The workflow reads them through the vars context, for example vars.UNIT_TEST_CONTAINERS and vars.UNIT_TOTAL_TEST_RUNNERS.

This makes the degree of parallelism easy to adjust. More runners give a faster CI when speed matters most, for example during incidents, at the price of potentially higher billable costs. Fewer runners give a slower but cheaper CI.

One might ask: what happens if someone accidentally sets a non-numeric value? Will the CI still run, just without splitting the files? The answer: handling that case is still a work in progress.
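Until a proper fallback exists, one option is to let ci-run.ts degrade to an unsplit run instead of failing. The following is a hypothetical sketch, not part of the author's scripts. `resolveRunnerConfig` is an illustrative helper that treats a missing, non-numeric, or out-of-range `TOTAL_RUNNERS`/`THIS_RUNNER` as a single runner that receives every spec file.

```typescript
// Hypothetical fallback (not the author's implementation): on invalid input,
// use one runner with index 0, which receives every spec (index % 1 === 0).
interface RunnerConfig {
  totalRunners: number
  thisRunner: number
  split: boolean
}

// Treat unset or blank values as invalid (Number('') would be 0).
function parseNumber(raw: string | undefined): number {
  if (raw === undefined || raw.trim() === '') return NaN
  return Number(raw)
}

function resolveRunnerConfig(env: Record<string, string | undefined>): RunnerConfig {
  const totalRunners = parseNumber(env.TOTAL_RUNNERS)
  const thisRunner = parseNumber(env.THIS_RUNNER)
  const valid =
    Number.isInteger(totalRunners) &&
    totalRunners > 0 &&
    Number.isInteger(thisRunner) &&
    thisRunner >= 0 &&
    thisRunner < totalRunners

  if (!valid) {
    console.warn('TOTAL_RUNNERS/THIS_RUNNER invalid; running all specs without splitting.')
    return { totalRunners: 1, thisRunner: 0, split: false }
  }
  return { totalRunners, thisRunner, split: true }
}

console.log(resolveRunnerConfig({ TOTAL_RUNNERS: '3', THIS_RUNNER: '1' }))
console.log(resolveRunnerConfig({ TOTAL_RUNNERS: 'three', THIS_RUNNER: '1' }))
```

This only covers the script side. fromJson in the workflow still needs valid JSON in the *_TEST_CONTAINERS variables. If the matrix still defines several containers, each of them would run the full suite, trading duplicated work for a pipeline that keeps running.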

One of the simplified CI workflows for the Jest and Cypress tests looks like this:

```yaml
name: CI

on:
  push:
    branches-ignore:
      - 'release-**'
      - 'dependabot/**'
    tags-ignore: '**'

env:
  # Placeholder value: in a real workflow, read the token from a secret instead
  NPMRC: '//registry.npmjs.org/:_authToken=somethingReallySecret'

jobs:
  unit-tests:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        # Run copies of the current job in parallel. These need to be a
        # continuous series of numbers, starting with `0`, e.g. [0, 1, 2].
        # If you change the number of containers, update
        # UNIT_TOTAL_TEST_RUNNERS (passed as TOTAL_RUNNERS below) to match.
        containers: ${{ fromJson(vars.UNIT_TEST_CONTAINERS) }}
    steps:
      - uses: actions/checkout@v3
      - name: Setup Node.js
        uses: actions/setup-node@v3
        with:
          node-version-file: .nvmrc
          cache: npm
      - name: Install Packages
        run: |
          npm config set ${{ env.NPMRC }}
          npm ci
      - name: Run unit tests
        run: npm run test:ci
        env:
          # the number of containers in the job matrix
          TOTAL_RUNNERS: ${{ vars.UNIT_TOTAL_TEST_RUNNERS }}
          THIS_RUNNER: ${{ matrix.containers }}
          TEST_FILES_PATTERN: "src/**/*.test.ts"
          TEST_COMMAND: "TZ=UTC jest --runInBand --findRelatedTests"

  e2e-tests:
    name: e2e Tests
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        containers: ${{ fromJson(vars.E2E_TEST_CONTAINERS) }}
    steps:
      - uses: actions/checkout@v3
      - name: Setup Node.js
        uses: actions/setup-node@v3
        with:
          node-version-file: .nvmrc
          cache: npm
      - name: Install Packages
        run: |
          npm config set ${{ env.NPMRC }}
          npm ci
      - name: Run Cypress e2e tests
        uses: cypress-io/github-action@v5
        with:
          install: false
          command: npm run test:ui-parallel
        env:
          TOTAL_RUNNERS: ${{ vars.E2E_TOTAL_TEST_RUNNERS }}
          THIS_RUNNER: ${{ matrix.containers }}
          TEST_FILES_PATTERN: "cypress/e2e/**/*.cy.{ts,tsx,js,jsx}"
          TEST_COMMAND: "cypress run --spec"
      - name: Upload Cypress Screenshots
        # upload-artifact v3 is deprecated; v4 requires a unique artifact
        # name per matrix job, hence the container suffix
        uses: actions/upload-artifact@v4
        if: failure()
        with:
          name: cypress-screenshots-${{ matrix.containers }}
          path: cypress/screenshots
```

The commands for running the unit and E2E tests are defined in package.json as follows:

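```json
{
  "scripts": {
    "test:ui-parallel": "start-server-and-test start http-get://localhost:4200 test:ci",
    "test:ci": "ts-node scripts/ci-run.ts"
  }
}
```

test:ui-parallel is Cypress-specific. It runs the start script, waits until http://localhost:4200 answers a GET request, and then runs test:ci. test:ci is the general entry point that both jobs use.

Results and Findings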

I have successfully applied this solution in several production apps. In one of them, CI runtimes dropped from 20–30 minutes to 13 minutes, and billable time fell by 10 minutes (from 52 to 42 minutes). In other cases, however, billable time stayed the same. GitHub Actions rounds each job's run time up to the nearest whole minute and bills the sum across all jobs. Since the sum of our parallel runners' times was lower than the time of the single runner, we ended up with lower billable costs.
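The rounding detail matters when comparing a single runner with several parallel ones. GitHub's billing documentation states that the minutes each job uses are rounded up to the nearest whole minute. The sketch below applies that rule to made-up job durations, given in seconds. It ignores per-OS minute multipliers and included free minutes, and `billableMinutes` is an illustrative name.

```typescript
// Round each job up to a whole minute, then sum across jobs.
// The durations below are hypothetical.
function billableMinutes(jobDurationsSeconds: number[]): number {
  return jobDurationsSeconds.reduce(
    (total, seconds) => total + Math.ceil(seconds / 60),
    0,
  )
}

// One job that takes 16m 10s.
const sequential = billableMinutes([970])
// The same work split over three jobs, each paying its own setup time.
const parallel = billableMinutes([380, 350, 305])

console.log({ sequential, parallel })
// → { sequential: 17, parallel: 19 }
```

Each extra job adds its own setup time plus up to one minute of rounding. Parallel runs therefore only bill less when splitting also removes work, as in the case described above.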

I typically set 2 to 4 concurrent jobs by default per type of testing (unit or E2E), but the exact number depends on the specific UI repository. As a general guideline, I increase the number of runners when a repository has more tests, to reduce the overall testing time. As Cully Larson's article also notes, GitHub Actions run times vary, so these values are approximate rather than exact. Note that the number of jobs that can run concurrently across all repositories in a user or organization account depends on the GitHub plan.

Moreover, to keep the workload reasonably balanced across the runners, I made sure that each test file contained a comparable number of tests and that each runner handled a roughly equal number of test files. As a result, I significantly reduced both the total duration and the total billable time of the CI workflow.
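Round-robin over a sorted list is only approximately balanced. A common alternative, not used in the scripts in this article, is the greedy "longest processing time first" heuristic. It takes files in descending order of test count and always gives the next file to the runner with the smallest current total.

A sketch with hypothetical test counts, using the number of tests as a proxy for run time (as the sorting in spec-split.js also does). `assignGreedy` is an illustrative name.

```typescript
// Greedy "longest processing time first" assignment of spec files to
// runners. Test counts stand in for run time; the counts are made up.
function assignGreedy(
  testsPerSpec: Record<string, number>,
  totalRunners: number,
): { specs: string[]; tests: number }[] {
  const runners = Array.from({ length: totalRunners }, () => ({
    specs: [] as string[],
    tests: 0,
  }))
  const sorted = Object.entries(testsPerSpec).sort((a, b) => b[1] - a[1])

  for (const [spec, count] of sorted) {
    // Pick the runner with the fewest tests so far (lowest index on ties).
    let target = runners[0]
    for (const runner of runners) {
      if (runner.tests < target.tests) target = runner
    }
    target.specs.push(spec)
    target.tests += count
  }
  return runners
}

const counts: Record<string, number> = {
  'a.test.ts': 12,
  'b.test.ts': 3,
  'c.test.ts': 8,
  'd.test.ts': 5,
  'e.test.ts': 1,
}
console.log(assignGreedy(counts, 3).map((r) => r.tests))
// → [ 12, 9, 8 ]
```

For the same input, round-robin over the sorted list gives totals of 15, 9 and 5, so the greedy version lowers the slowest runner's load. Like the round-robin split, it must produce the same assignment on every runner. That holds as long as every runner sees the files in the same order, since ties are broken by input order.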

I also tuned each testing framework to shorten CI durations. For the Jest tests with ts-jest, I disabled type checking with `isolatedModules: true`, and I plan to try swc-jest as well. For the Cypress E2E tests, I enabled test retries to mitigate flakiness and reduce CI build failures.

Generally speaking, adding too many runners can increase billable time, because every runner pays the setup overhead, so the trade-off deserves careful thought. Suppose parallelizing the Node.js tests saves 10 minutes per run, reducing execution time from 17 to 7 minutes, but bills 27 minutes instead of 17. We then have to weigh the cost of those 10 extra billable minutes against a developer sitting idle through a longer feedback loop.

Making the developer wait an additional 10 minutes for a sequential test job instead of a parallel one might, for example, save $1 in job execution costs. However, the developer's idle time costs $4.17 (assuming an annual salary of $50,000 and 2,000 working hours per year, i.e. $25 per hour). The developer may not wait on every run. With these numbers, though, sequential processing stops paying off once they wait on more than about one run in four ($1 / $4.17 ≈ 0.24). Beyond that point, switching to sequential processing becomes a financial burden.
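The arithmetic behind this trade-off can be written out. A minimal sketch using the example's figures: $50,000 per year, 2,000 working hours, 10 extra minutes of waiting, and an illustrative $1 saved per sequential run. The function names are hypothetical.

```typescript
// Cost of a developer waiting `minutes`, from annual salary and hours per year.
function waitingCost(annualSalary: number, hoursPerYear: number, minutes: number): number {
  const hourlyRate = annualSalary / hoursPerYear // 50000 / 2000 = 25 per hour
  return (hourlyRate * minutes) / 60
}

// Share of runs the developer can wait on before the waiting costs more
// than sequential runs save.
function breakEvenWaitFraction(savingPerRun: number, costPerWait: number): number {
  return savingPerRun / costPerWait
}

const costPerWait = waitingCost(50000, 2000, 10)
const fraction = breakEvenWaitFraction(1, costPerWait)
console.log(costPerWait.toFixed(2), fraction.toFixed(2))
// → 4.17 0.24
```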

In my opinion, paying for the extra GitHub Actions minutes is the pragmatic choice for tests, saving both money and developer frustration, but each situation should be assessed individually. In their article, Wenqi Glantz shares further insights into the possible hidden costs of parallel processing in GitHub Actions.

I look forward to your thoughts, concerns, inquiries, or any insights you would like to impart. Please do not hesitate to share them.